
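This crawler system was built for a school project.

The post first explains the overall system and how it runs.

It then goes through how the crawler program is written.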

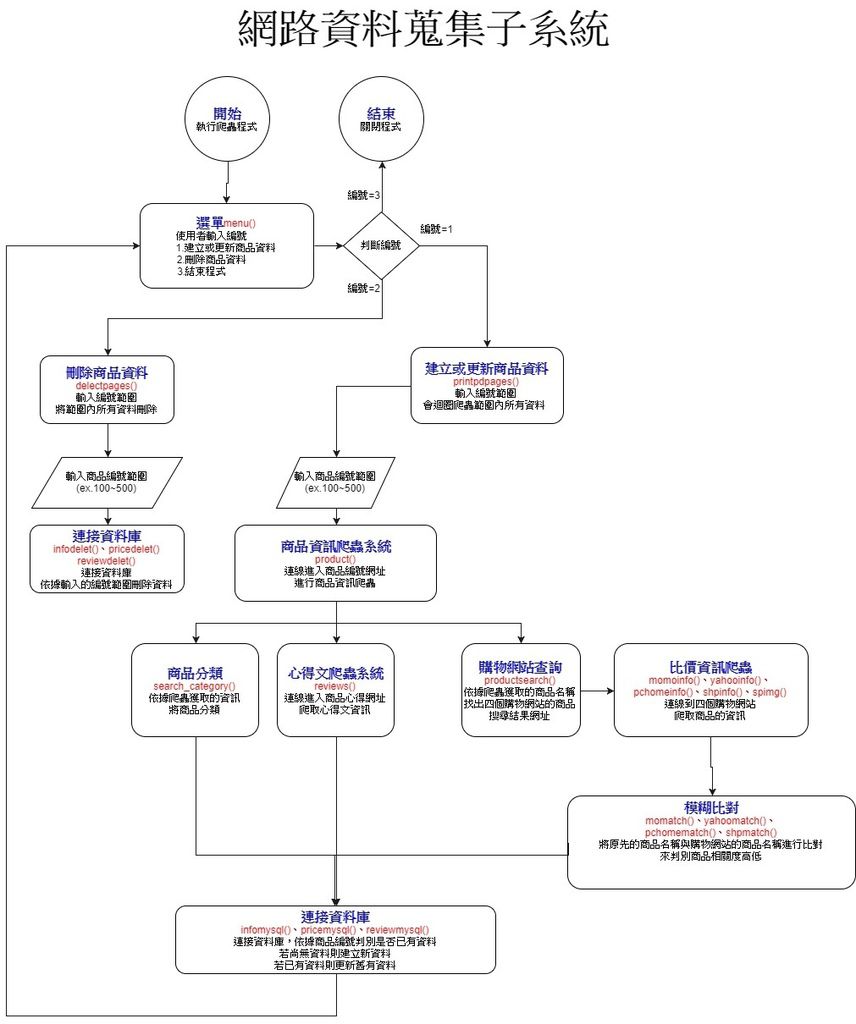

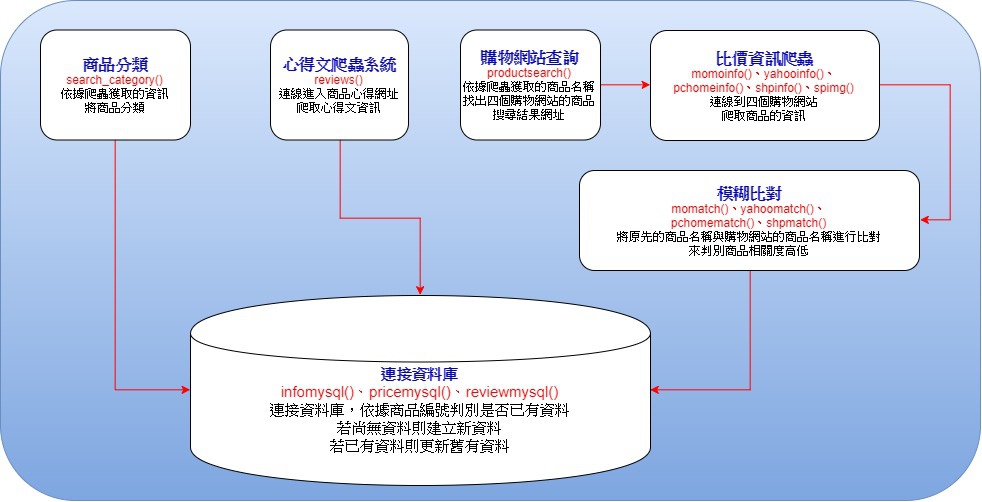

(I) System Architecture

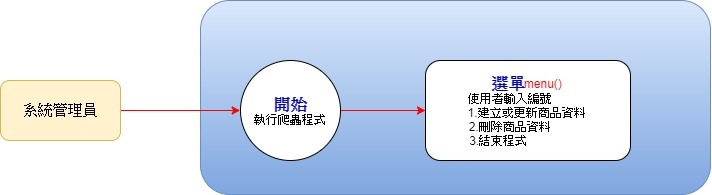

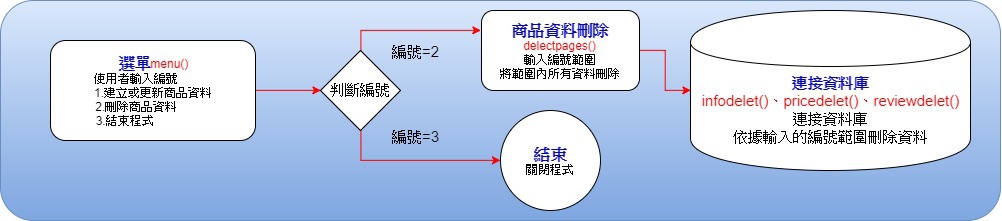

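a. The figure below shows the menu, which offers three options: "Create or update product data", "Delete product data", and "Quit". The program acts on the number the administrator enters.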

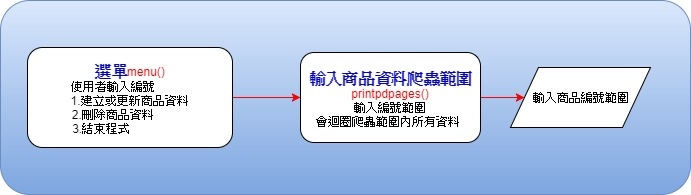

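b. Option 1 runs "Create or update product data". The user enters a starting and an ending product ID, and the program crawls the data for every ID in that range.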

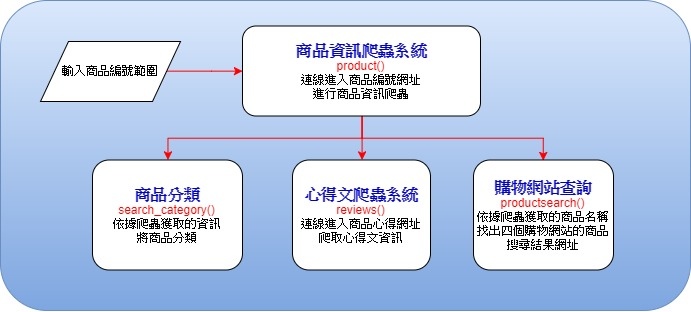

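c. For each product ID, the program crawls the product information, classifies the product, crawls the review information, and builds the search-result URLs for four shopping sites.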

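d. With the four search-result URLs in hand, the program connects to each shopping site and crawls the product information there. It then fuzzy-matches the original product name against the name found on each shopping site to judge how relevant the result is. Finally, the product information, category, review information, price-comparison data, and relevance are sent to the database.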

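e. The database is checked by product ID. If no row exists yet, a new one is created; if a row already exists, it is updated.

Option 2 runs "Delete product data". The user enters a starting and an ending product ID, and the program connects to the database and deletes the data for every ID in that range. Option 3 runs "Quit" and exits the program.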
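The create-or-update decision in step e can be sketched as follows. This is only an illustration under assumptions: it uses Python's built-in `sqlite3` with an in-memory database so that it runs anywhere, whereas the actual program talks to MySQL through `pymysql`. The helper names `make_demo_db` and `upsert_product` and the two-column table are hypothetical.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with a tiny stand-in for the info table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE info (info_page INTEGER PRIMARY KEY, info_itemname TEXT)"
    )
    return conn


def upsert_product(conn, page, name):
    """Insert a row for `page`, or update it when one already exists."""
    cur = conn.cursor()
    cur.execute("SELECT 1 FROM info WHERE info_page = ?", (page,))
    if cur.fetchone() is None:
        cur.execute(
            "INSERT INTO info (info_page, info_itemname) VALUES (?, ?)",
            (page, name),
        )
        action = "created"
    else:
        cur.execute(
            "UPDATE info SET info_itemname = ? WHERE info_page = ?",
            (name, page),
        )
        action = "updated"
    conn.commit()
    return action
```

Calling `upsert_product` twice with the same product ID creates the row the first time and updates it the second time, so the table never holds two rows for one product.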

(II) Code Overview

1. Menu

```python
# Imports used by the main program
import re
import sqlite3
from urllib import request
from urllib.error import HTTPError
from urllib.parse import urlparse
from urllib.request import urlopen

from bs4 import BeautifulSoup


# Main menu
def menu():
    print("========urcosme crawler========")
    print()
    print("Enter an option number")
    print("1. Create or update product data")
    print("2. Delete product data")
    print("3. Quit")
    print()
    print()
    print()
    print("========urcosme crawler========")


# Main program
while True:
    menu()
    try:
        choice = int(input("Your choice: "))
    except ValueError:
        # Only a non-numeric entry is treated as a bad option
        print("Please enter an option number")
    else:
        if choice == 3:
            break
        elif choice == 1:
            printpdpages()
        elif choice == 2:
            delectpages()
        else:
            print("Please enter an option number")
    x = input("Press Enter to return to the main menu")
```

2. Create, update, or delete product data

```python
# Crawl or delete a range of product IDs
import json
import re
import sqlite3
from urllib import request
from urllib.error import HTTPError
from urllib.parse import urlparse
from urllib.request import urlopen

import requests
from bs4 import BeautifulSoup
from selenium import webdriver


def printpdpages():
    global page
    try:
        pages1 = int(input("Enter the first product ID to crawl: "))
        pages2 = int(input("Enter the last product ID to crawl: "))
    except ValueError:
        print("Please enter a number")
        return  # without this, pages1/pages2 would be undefined below
    if 1 <= pages1 <= 85000 and pages2 >= pages1:
        for page in range(pages1, pages2 + 1):
            print("Now crawling ID:", page)
            try:
                product('https://www.urcosme.com/products/{}'.format(page))
                infomysql()
                pricemysql()
                reviewmysql()
            except HTTPError:
                print("No product at this URL")
    else:
        print("Please enter a value between 1 and 85000")


def delectpages():
    global page
    global numst
    global numed
    try:
        pages1 = int(input("Enter the first product ID to delete: "))
        pages2 = int(input("Enter the last product ID to delete: "))
    except ValueError:
        print("Please enter a number")
        return
    numst = pages1
    numed = pages2
    if 1 <= pages1 <= 85000 and pages2 >= pages1:
        print("Now deleting IDs", numst, "to", numed)
        try:
            infodelect()
            pricedelect()
            reviewdelect()
        except HTTPError:
            print("No product at this URL")
    else:
        print("Please enter a value between 1 and 85000")
```
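The range check shared by the crawl and delete functions can be pulled out into one small helper, which also makes it testable without keyboard input. This is a sketch only; `parse_range` and `MAX_PRODUCT_ID` are hypothetical names, and the rule mirrors the code above (the start must lie in 1 to 85000 and the end must not be smaller than the start).

```python
MAX_PRODUCT_ID = 85000  # upper bound used by the range check above


def parse_range(start_text, end_text, max_id=MAX_PRODUCT_ID):
    """Return the range of product IDs to process, or None if the input is invalid."""
    try:
        start, end = int(start_text), int(end_text)
    except ValueError:
        return None  # non-numeric input
    if 1 <= start <= max_id and end >= start:
        return range(start, end + 1)  # inclusive of the last ID
    return None
```

A caller would loop over the returned range, or print the "please enter a value" message when it gets `None`.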

3. Product information crawler

```python
# Product information crawler
def product(linkkk):
    global page, mcate, catt, cate
    global itemname             # product name
    global img3                 # product image URL
    global usedd                # number of reviews
    global usedpage             # reviews URL
    global titlink              # first ten review titles + URLs
    global psc                  # score
    global hotpoint             # popularity
    global prin1, prin2, prin3  # volume, price, release date
    global bdd                  # brand
    global titlink0, titlink1, titlink2, titlink3, titlink4, titlink5, titlink6, titlink7, titlink8, titlink9
    global titq0, titq1, titq2, titq3, titq4, titq5, titq6, titq7, titq8, titq9
    try:
        url = linkkk  # page to fetch
        user_agent = 'Mozilla/5.0 (Windows; U; Windows NT 6.1; zh-CN; rv:1.9.2.15) Gecko/20110303 Firefox/3.6.15'
        headers = {'User-Agent': user_agent}
        data_res = request.Request(url=url, headers=headers)
        data = request.urlopen(data_res)
        data = data.read().decode('utf-8')
        sp = BeautifulSoup(data, "lxml")

        # Product name (the fourth itemprop="name" span on the page)
        items = sp.findAll("span", {"itemprop": "name"})
        itemnames = [item.text for item in items]
        try:
            itemname = itemnames[3]
        except IndexError:
            print("This product URL is invalid")
            return
        print(itemname)

        # Product image
        imgs = sp.find("div", {"class": "product-info-image"}).findAll("img", src=re.compile(r"/product_image/"))
        if imgs != []:
            for img in imgs:
                img3 = img['src']
        else:
            img3 = '無圖片'
        print(img3)

        # Reviews: count and URL
        used = sp.findAll('span', {'class': 'hint-text'})
        if used != []:
            for use in used:
                usedd = use.text.strip('(').strip(')')
                usedpage = linkkk + '/reviews'
        else:
            usedd = '無心得'
            usedpage = '無心得'
        print("Number of reviews:", usedd)
        print("Reviews URL:", usedpage)

        # Score ('-.-' on the page means the product has no score yet)
        productscore = sp.findAll("div", {"class": "product-info-score"})
        for pscc in productscore:
            if pscc.text.strip('UrCosme指數 ') == '-.-':
                psc = '無評分'
            else:
                psc = pscc.text.strip('UrCosme指數 ')
        print("Score:", psc)

        # Popularity (first of three "a / b / c" counters, thousands separators removed)
        hott = sp.findAll('div', {'class': 'product-info-engagement-counts'})
        for hot in hott:
            hots = hot.text
            hotss = re.search(r'(.*) \/ (.*) \/ (.*)', hots)
            hotpointtt = hotss.group(1)
            hotpointt = hotpointtt.strip('人氣 ')
            hotpoint = hotpointt.replace(',', '')
        print(hotpoint)

        # Volume, price, release date: labels and values come as parallel lists
        prinlb = [lb.text for lb in sp.findAll("div", {"class": "other-label"})]
        prinfo = [tx.text for tx in sp.findAll("div", {"class": "other-text"})]

        def labelled(label, positions, default):
            # Return the value whose label sits at one of the given positions
            for i in positions:
                if i < len(prinlb) and i < len(prinfo) and prinlb[i] == label:
                    return prinfo[i]
            return default

        prin1 = labelled('容量', (0,), '未知')
        raw_price = labelled('價格', (0, 1), None)
        if raw_price is None:
            prin2 = ' '
        else:
            prin2 = raw_price.strip('NT$ ').replace('/', ' ').split(' ')[0]
        prin3 = labelled('上市日期', (0, 1, 2), '未知')
        print("Volume:", prin1, "Price:", prin2, "Release date:", prin3)

        # Sub-category
        try:
            productcategory = sp.find("div", {"class": "product-info-main-attr"}).findAll("a", {"class": "uc-main-link"})
            pdct = [pdctt.text for pdctt in productcategory]
            catt = pdct[0] if pdct else '未分類'
        except Exception:
            catt = '未分類'
        print("Sub-category:", catt)
        search_category()

        # Brand (the third itemprop="name" span)
        bdd = itemnames[2]
        print("Brand:", bdd)

        # First ten review titles + URLs
        reviews(linkkk)
        # Price comparison
        productsearch(itemname)
    except HTTPError:
        print("This URL is invalid")
```

3. Product categorization

```python
# Category lookup: sub-category (catt) -> category (cate) -> main category (mcate)
# Each entry lists, separated by spaces, the names that belong to the key.
CATE_MEMBERS = {
    '洗臉': '洗面皂 洗面乳 洗顏粉 洗顏慕斯 其它洗顏',
    '卸妝': '卸妝乳 卸妝油 卸妝露 卸妝水 卸妝霜 眼唇卸妝 卸妝棉 其它卸妝',
    '乳液': '乳液',
    '乳霜': '乳霜',
    '凝霜': '凝霜',
    '凝膠': '凝膠',
    '化妝水': '化妝水',
    '前導': '導入液 前導精華 其它前導',
    '精華': '精華液 精華油 安瓶 其它精華',
    '面膜': '保養面膜 清潔面膜',
    '多功能保養': '多功能保養',
    '去角質': '臉部去角質 唇部去角質 其它去角質',
    '眼睫保養': '眼霜 眼膜 眼部精華 睫毛液 其它眼部保養',
    '唇部保養': '護唇膏 護唇精華 唇膜 其它唇部保養',
    '臉部防曬': '臉部防曬',
    '身體防曬': '身體防曬',
    '妝前': '隔離霜 眼部打底 其它妝前',
    '遮瑕': '遮瑕膏 遮瑕筆 眼部遮瑕 其它遮瑕',
    '粉底': '粉底液 粉餅 粉霜 氣墊粉餅 BB霜 CC霜 其它粉底',
    '定妝': '蜜粉 蜜粉餅 其它定妝',
    '眉彩': '眉筆 眉粉 染眉膏 其它眉彩',
    '眼線': '眼線筆 眼線液 眼線膠 其它眼線',
    '眼影': '眼影盤 眼影膏 眼影筆 眼影蜜 其它眼影',
    '睫毛': '睫毛膏 睫毛底膏 睫毛定型',
    '頰彩': '腮紅 腮紅霜 腮紅蜜 氣墊腮紅 其它腮紅',
    '修容': '修容棒 修容餅 其它修容',
    '唇彩': '唇膏 唇筆 唇線筆 唇蜜 唇釉 唇露 其它唇彩',
    '美甲': '指甲油 基底油 護甲油 去光水 其它美甲工具',
    '多功能彩妝': '多功能彩妝',
    '美體保養': '身體乳液 身體乳霜 身體按摩油 身體去角質 其它美體保養',
    '手部保養': '護手霜 指緣油 手膜 其它手部保養',
    '腿足保養': '足膜 足部舒緩 其它腿足保養',
    '其它部位保養': '美胸霜 其它保養',
    '私密護理': '私密保養 私密清潔',
    '沐浴清潔': '沐浴乳 沐浴露 肥皂 入浴劑 其它沐浴清潔',
    '爽身制汗': '止汗膏 爽身噴霧 其它爽身制汗',
    '牙齒保養': '美白牙膏 其它牙齒保養',
    '洗髮': '洗髮乳 乾洗髮 其它洗髮',
    '潤髮': '潤髮乳 其它潤髮',
    '護髮': '護髮乳 護髮霜 髮膜 護髮油 護髮素 其它護髮',
    '頭皮護理': '頭皮護理',
    '染髮': '染髮劑 泡泡染 其它染髮',
    '頭髮造型': '定型噴霧 髮蠟 髮膠 髮乳 慕斯 髮妝水 其它頭髮造型',
    '香水香精': '香水 淡香水 香精 淡香精',
    '其它香水香氛': '其它香水香氛',
    '臉部保養工具': '化妝棉 洗臉工具 臉部按摩 其它臉部保養工具',
    '彩妝工具': '刷具 睫毛夾 海綿粉撲 假睫毛 用具清潔 其它彩妝工具',
    '身體保養工具': '沐浴工具 身體按摩 其它身體保養工具',
    '美髮工具': '梳子 洗髮工具 頭皮按摩 其它美髮工具',
    '美容家電': '洗臉機 吹風機 其它美容家電',
}

MCATE_MEMBERS = {
    '基礎保養': '洗臉 卸妝 化妝水 乳液 乳霜 凝霜 凝膠 前導 精華 面膜 多功能保養',
    '進階護膚': '去角質 眼睫保養 唇部保養 進階保養',
    '防曬': '臉部防曬 身體防曬',
    '底妝': '妝前 遮瑕 粉底 定妝',
    '彩妝': '眉彩 眼線 眼影 睫毛 頰彩 修容 唇彩 美甲 多功能彩妝',
    '身體保養': '美體保養 手部保養 腿足保養 其它部位保養 私密護理 沐浴清潔 爽身制汗 牙齒保養',
    '美髮': '洗髮 潤髮 護髮 頭皮護理 染髮 頭髮造型',
    '香水香氛': '香水香精 其它香水香氛',
    '美容工具': '臉部保養工具 彩妝工具 身體保養工具 美髮工具 美容家電',
}

# Invert both tables so that each name maps directly to its parent
CATT_TO_CATE = {name: cate for cate, names in CATE_MEMBERS.items() for name in names.split()}
CATE_TO_MCATE = {name: mcate for mcate, names in MCATE_MEMBERS.items() for name in names.split()}


def search_category():
    global mcate
    global cate
    global catt
    # Anything that is not listed falls back to '未分類' (uncategorized)
    cate = CATT_TO_CATE.get(catt, '未分類')
    mcate = CATE_TO_MCATE.get(cate, '未分類')
    print("Main category:", mcate)
    print("Category:", cate)
```

4. Review crawler

```python
# Review crawler: titles and URLs of the first ten reviews
def reviews(linkkk):
    global titlink0, titlink1, titlink2, titlink3, titlink4, titlink5, titlink6, titlink7, titlink8, titlink9
    global titq0, titq1, titq2, titq3, titq4, titq5, titq6, titq7, titq8, titq9
    url = linkkk + '/reviews'  # page to fetch
    user_agent = 'Mozilla/5.0 (Windows; U; Windows NT 6.1; zh-CN; rv:1.9.2.15) Gecko/20110303 Firefox/3.6.15'
    headers = {'User-Agent': user_agent}
    data_res = request.Request(url=url, headers=headers)
    data = request.urlopen(data_res)
    data = data.read().decode('utf-8')
    sp = BeautifulSoup(data, "lxml")
    titles = sp.findAll("div", {"class": "title-text single-dot uc-content-link"})
    morelinks = sp.findAll("a", href=re.compile(r'/reviews/[0-9]'))
    tit = [title.text for title in titles]
    ml = ['https://www.urcosme.com' + str(mlss['href']) for mlss in morelinks]

    # Keep at most ten titles, and a URL only where a title exists too
    titlink = tit[:10]
    titq = ml[:len(titlink)]
    # Pad both lists with '' so that all ten slots are always defined
    titlink += [''] * (10 - len(titlink))
    titq += [''] * (10 - len(titq))

    (titlink0, titlink1, titlink2, titlink3, titlink4,
     titlink5, titlink6, titlink7, titlink8, titlink9) = titlink
    (titq0, titq1, titq2, titq3, titq4,
     titq5, titq6, titq7, titq8, titq9) = titq
    for value in titlink + titq:
        print(value)
```
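As an alternative to twenty separate global variables, the first ten reviews could be carried around as one list of (title, URL) pairs. The sketch below is an assumption about how that might look, not part of the original program, and `first_reviews` is a hypothetical name. Unlike the function above, it drops a title that has no matching URL, because `zip` stops at the shorter list.

```python
def first_reviews(titles, urls, limit=10):
    """Pair titles with URLs, keep the first `limit`, and pad with empty pairs."""
    pairs = list(zip(titles, urls))[:limit]
    pairs += [("", "")] * (limit - len(pairs))
    return pairs
```

The result always has exactly `limit` entries, so code that writes ten title and URL columns to the database can index it without extra checks.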

5. Shopping-site search URLs

```python
# Build the search URL for each shopping site, then crawl each one
import urllib.parse


def productsearch(main):
    global momosearch
    global pchomesearch
    global yahoosearch
    global shpsearch
    global itemname
    # Percent-encode the product name so it is safe inside a URL
    mains = urllib.parse.quote(str(main))
    momo = 'https://www.momoshop.com.tw/search/searchShop.jsp?keyword='
    momosearch = momo + mains
    pchome = 'http://ecshweb.pchome.com.tw/search/v3.3/all/results?q='
    pchomesearch = pchome + mains
    yahoo = 'https://tw.search.mall.yahoo.com/search/mall/product?p='
    yahoosearch = yahoo + mains
    shp1 = 'https://shopee.tw/search/?keyword='
    shp2 = '&sortBy=sales'
    shpsearch = shp1 + mains + shp2
    print("momo:")
    print(momosearch)
    momoinfo()
    print("PChome 24h:")
    print(pchomesearch)
    pchomeinfo()
    print("Yahoo mall:")
    print(yahoosearch)
    yahooinfo()
    print("Shopee:")
    print(shpsearch)
    shpinfo()
```
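To see what the percent-encoding step does to a Chinese product name, here is a small self-contained sketch that returns the four URLs instead of storing them in globals. The function name `build_search_urls` and the dictionary keys are hypothetical; the URL prefixes are the ones used above.

```python
from urllib.parse import quote


def build_search_urls(name):
    """Return the search URL of each shopping site for a product name."""
    q = quote(str(name))  # UTF-8 percent-encoding, e.g. a space becomes %20
    return {
        "momo": "https://www.momoshop.com.tw/search/searchShop.jsp?keyword=" + q,
        "pchome": "http://ecshweb.pchome.com.tw/search/v3.3/all/results?q=" + q,
        "yahoo": "https://tw.search.mall.yahoo.com/search/mall/product?p=" + q,
        "shopee": "https://shopee.tw/search/?keyword=" + q + "&sortBy=sales",
    }


urls = build_search_urls("唇膏")
print(urls["momo"])  # the keyword is encoded as %E5%94%87%E8%86%8F
```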

6. Shopping-site price crawler

```python
import json
import re

import requests
from bs4 import BeautifulSoup
from selenium import webdriver

# Path to the PhantomJS executable; adjust it for your machine.
# webdriver.PhantomJS needs Selenium 3.x; it was removed in Selenium 4.
PHANTOMJS_PATH = r'C:\selenium_driver_chrome\phantomjs-2.1.1-windows\bin\phantomjs.exe'
NO_DATA = "噢哦,查無相關資料"                       # stored when nothing is found
NO_IMAGE = "http://cosmepick.xyz/images/005.jpg"  # fallback shown by the site's <img> tag


def momoinfo():
    global moitn0, moitp0, moiturl0, moitimg0
    driver = webdriver.PhantomJS(executable_path=PHANTOMJS_PATH)
    driver.get(momosearch)   # hand the search URL to the browser
    ps = driver.page_source  # rendered page source
    sp = BeautifulSoup(ps, "lxml")
    try:
        moitemname = sp.findAll("p", {'class': 'prdName'})
        moitemprice = sp.findAll("span", {'class': 'price'})
        moitemurl = sp.findAll("a", href=re.compile(r"/goods/"))
        moitemimg = sp.find("a", {'class': 'goodsUrl'}).findAll("img", src=re.compile("http"))
        mourll = 'https://www.momoshop.com.tw/'
        # Only the first search result is kept
        moitn0 = moitemname[0].text
        moitp0 = moitemprice[0].text
        moiturl0 = mourll + moitemurl[0]['href']
        moitimg0 = moitemimg[0]['src']
    except Exception:
        moitn0 = NO_DATA
        moitp0 = NO_DATA
        moiturl0 = ""
        moitimg0 = NO_IMAGE
    momatch(moitn0)
    print(moitn0)
    print(moitp0)
    print(moiturl0)
    print('img')
    print(moitimg0)
    driver.close()


def pchomeinfo():
    global pcitems0, pcprices0, pcurls0, pcimg0
    res = requests.get(pchomesearch)  # this endpoint returns JSON
    jd = json.loads(res.text)
    pcmainurl = 'http://24h.pchome.com.tw/prod/'
    pcimgurl = 'http://a.ecimg.tw'
    # Collect every result in lists so the first one can be picked out afterwards
    pcitems = []
    pcprices = []
    pcurls = []
    pcimg = []  # this list was never created in the first version, so the lookup always failed
    try:
        for item in jd['prods']:
            pcitems.append(item['name'])
            pcprices.append(item['price'])
            pcurls.append(pcmainurl + item['Id'])
            pcimg.append(pcimgurl + item['picB'])
        pcitems0 = pcitems[0]
        pcprices0 = pcprices[0]
        pcurls0 = pcurls[0]
        pcimg0 = pcimg[0]
    except Exception:
        pcitems0 = NO_DATA
        pcprices0 = NO_DATA
        pcurls0 = ""
        pcimg0 = NO_IMAGE
    pcmatch(pcitems0)
    print(pcitems0)  # name and price of the first result
    print(pcprices0)
    print(pcurls0)
    print(pcimg0)


def yahooinfo():
    global yaitn0, yaitp0, yaitul0, yaitimg0
    driver = webdriver.PhantomJS(executable_path=PHANTOMJS_PATH)
    driver.get(yahoosearch)
    ps = driver.page_source
    sp = BeautifulSoup(ps, "lxml")
    try:
        yaitemname = sp.find("div", {"class": "srp-pdtitle"}).findAll("a", title=re.compile(""), href=re.compile("http"))
        yaitemprice = sp.findAll("em", {"class": "yui3-u"})
        yaitemimg = sp.find("div", {"class": "srp-pdimage"}).findAll("img", src=re.compile("http"))
        yaitn0 = yaitemname[0]['title']
        yaitp0 = yaitemprice[0].text
        yaitul0 = yaitemname[0]['href']
        yaitimg0 = yaitemimg[0]['src']
    except Exception:
        yaitn0 = NO_DATA
        yaitp0 = NO_DATA
        yaitul0 = ""
        yaitimg0 = NO_IMAGE
    yamatch(yaitn0)
    print(yaitn0)
    print(yaitp0)
    print(yaitul0)
    print(yaitimg0)
    driver.close()


def shpinfo():
    global spitems0, spprices0, spurls0, spimgs0
    driver = webdriver.PhantomJS(executable_path=PHANTOMJS_PATH)
    driver.get(shpsearch)
    ps = driver.page_source
    sp = BeautifulSoup(ps, "lxml")
    try:
        spitemss = sp.findAll("div", {"class": "shopee-item-card__text-name"})
        sppricess = sp.findAll("div", {"class": "shopee-item-card__current-price"})
        spurlss = sp.find("div", {"class": "shopee-search-result-view__item-card"}).findAll("a", href=re.compile(r"/"))
        sprull = 'https://shopee.tw'
        spitems0 = spitemss[0].text
        spprices0 = sppricess[0].text
        spurls0 = sprull + spurlss[0]['href']
        spimg()  # the image is only available on the product page itself
    except Exception:
        spitems0 = NO_DATA
        spprices0 = NO_DATA
        spurls0 = ""
        spimgs0 = NO_IMAGE
    shpmatch(spitems0)
    print(spitems0)
    print(spprices0)
    print(spurls0)
    print(spimgs0)
    driver.close()


def spimg():
    global spimgs0
    driver = webdriver.PhantomJS(executable_path=PHANTOMJS_PATH)
    driver.get(spurls0)
    ps = driver.page_source
    sp = BeautifulSoup(ps, "lxml")
    urlcrack = 'https://i0.wp.com/c'
    try:
        spimgss = sp.find("div", {"class": "shopee-image-gallery__main"}).findAll("div", style=re.compile(""))
        # str.strip() removes a set of characters, not a prefix, so it also eats
        # leading characters of the host name; urlcrack ends in 'c' to put one back.
        spimgs = [urlcrack + node['style'].strip('background-image: url(https://').strip(');')
                  for node in spimgss]
        spimgs0 = spimgs[0]
    except Exception:
        spimgs0 = NO_IMAGE
    driver.close()
```

7. Fuzzy matching

```python
# Fuzzy matching, character by character.
# `a` is the product name found on the shopping site; itemname is the name
# being searched for. The four site functions share the two helpers below.
def _match_ratio(a):
    itemmm = str(itemname)
    a_count = sum(1 for aa in a if aa in itemmm)  # characters of a that occur in itemname
    result = a_count / len(a)                     # share of matching characters
    print(result)
    return result


def _label(result):
    # 0.7 or more counts as "相關" (relevant), anything lower as "類似" (similar)
    ans = "相關" if result >= 0.7 else "類似"
    print(ans)
    return ans


def momatch(a):
    global moans
    result = _match_ratio(a)
    print("Queried product:", str(itemname))
    print("Found product:", a)
    moans = _label(result)


def yamatch(a):
    global yaans
    yaans = _label(_match_ratio(a))


def pcmatch(a):
    global pcans
    pcans = _label(_match_ratio(a))


def shpmatch(a):
    global shpans
    shpans = _label(_match_ratio(a))
```
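One property of this character-membership ratio is worth seeing with concrete numbers: it ignores character order, so a scrambled name still scores 1.0. The sketch below restates the ratio as a pure function and compares it with `difflib.SequenceMatcher` from the standard library, which does take order into account. The function names are hypothetical, and `SequenceMatcher` is shown only as a possible alternative, not as what the program uses.

```python
from difflib import SequenceMatcher


def char_ratio(found, query):
    """Share of characters in `found` that also occur somewhere in `query`."""
    if not found:
        return 0.0  # avoid dividing by zero on an empty name
    hits = sum(1 for ch in found if ch in query)
    return hits / len(found)


def sequence_ratio(found, query):
    """Order-aware similarity in [0, 1] from the standard library."""
    return SequenceMatcher(None, found, query).ratio()


print(char_ratio("abxy", "ab"))      # 2 of 4 characters match -> 0.5
print(char_ratio("cba", "abc"))      # order is ignored -> 1.0
print(sequence_ratio("cba", "abc"))  # order matters, so this is below 1.0
```

With the 0.7 threshold used above, a shop listing made of the same characters in a different order would still be labelled as relevant by the character ratio.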

8. Database connection

```python
# Product info: create, update, delete
import pymysql


def _connect():
    # Set these parameters to match your own database
    return pymysql.connect(user='root', passwd='00000000', database='item', charset='utf8')


# %s placeholders are filled in by pymysql, which escapes quotes and other
# special characters in product names.
INFO_INSERT_SQL = (
    "INSERT INTO `info` (`info_page`, `info_mcate`, `info_cate`, `info_catt`, `info_bdd`, "
    "`info_itemname`, `info_img3`, `info_usedd`, `info_prin1`, `info_prin2`, `info_prin3`, "
    "`info_hotpoint`) VALUES (%s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s, %s)"
)
INFO_UPDATE_SQL = (
    "UPDATE info SET info_mcate = %s, info_cate = %s, info_catt = %s, info_bdd = %s, "
    "info_itemname = %s, info_img3 = %s, info_usedd = %s, info_prin1 = %s, info_prin2 = %s, "
    "info_prin3 = %s, info_hotpoint = %s WHERE info_page = %s"
)


def _info_values():
    # Values are bound by position, in the order the program has always used.
    # Note that this order puts prin1 into info_usedd and psc into info_prin3;
    # check it against your own table layout.
    return (mcate, cate, catt, bdd, itemname, img3, prin1, prin2, prin3, psc, hotpoint)


# Create the row, or update it if the product ID already exists
def infomysql():
    db = _connect()
    cursor = db.cursor()
    try:
        cursor.execute("SELECT * FROM `info` WHERE info_page = %s", (page,))
        if len(cursor.fetchall()) == 0:
            cursor.execute(INFO_INSERT_SQL, (page,) + _info_values())
            print("Product info saved as a new row")
        else:
            cursor.execute(INFO_UPDATE_SQL, _info_values() + (page,))
            print("Product info updated,", cursor.rowcount, "row(s)")
        db.commit()
    except Exception as e:
        print("This record could not be saved:", e)
    finally:
        db.close()


# Update only
def infoupdate():
    try:
        db = _connect()
        cursor = db.cursor()
        cursor.execute(INFO_UPDATE_SQL, _info_values() + (page,))
        print("Product info updated,", cursor.rowcount, "row(s)")
        db.commit()
        db.close()
    except Exception:
        print("Some fields are missing, please crawl the product again")


# Delete every row whose ID lies between numst and numed
def infodelect():
    db = _connect()
    cursor = db.cursor()
    cursor.execute("DELETE FROM info WHERE info_page >= %s AND info_page <= %s", (numst, numed))
    print("Product info deleted,", cursor.rowcount, "row(s)")
    db.commit()
    db.close()
```

```python
# Price comparison: create, update, delete
import pymysql


def _connect():
    # Set these parameters to match your own database
    return pymysql.connect(user='root', passwd='00000000', database='item', charset='utf8')


# price_momolike, price_momoname, ..., price_shpurl, price_shpimg (20 columns)
PRICE_COLUMNS = ["price_" + site + field
                 for site in ("momo", "yahoo", "pchome", "shp")
                 for field in ("like", "name", "price", "url", "img")]
PRICE_INSERT_SQL = (
    "INSERT INTO `price` (`price_page`, "
    + ", ".join("`" + c + "`" for c in PRICE_COLUMNS)
    + ") VALUES (" + ", ".join(["%s"] * (len(PRICE_COLUMNS) + 1)) + ")"
)
PRICE_UPDATE_SQL = (
    "UPDATE price SET " + ", ".join(c + " = %s" for c in PRICE_COLUMNS)
    + " WHERE price_page = %s"
)


def _price_values():
    # Same order as PRICE_COLUMNS
    return (moans, moitn0, moitp0, moiturl0, moitimg0,
            yaans, yaitn0, yaitp0, yaitul0, yaitimg0,
            pcans, pcitems0, pcprices0, pcurls0, pcimg0,
            shpans, spitems0, spprices0, spurls0, spimgs0)


# Create the row, or update it if the product ID already exists
def pricemysql():
    db = _connect()
    cursor = db.cursor()
    try:
        cursor.execute("SELECT * FROM `price` WHERE price_page = %s", (page,))
        if len(cursor.fetchall()) == 0:
            cursor.execute(PRICE_INSERT_SQL, (page,) + _price_values())
            print("Price comparison saved as a new row")
        else:
            cursor.execute(PRICE_UPDATE_SQL, _price_values() + (page,))
            print("Price comparison updated,", cursor.rowcount, "row(s)")
        db.commit()
    except Exception as e:
        print("This record could not be saved:", e)
    finally:
        db.close()


# Update only
def priceupdate():
    try:
        db = _connect()
        cursor = db.cursor()
        cursor.execute(PRICE_UPDATE_SQL, _price_values() + (page,))
        print("Price comparison updated,", cursor.rowcount, "row(s)")
        db.commit()
        db.close()
    except Exception:
        print("Some fields are missing, please crawl the product again")


# Delete every row whose ID lies between numst and numed
def pricedelect():
    try:
        db = _connect()
        cursor = db.cursor()
        cursor.execute("DELETE FROM price WHERE price_page >= %s AND price_page <= %s", (numst, numed))
        print("Price comparison deleted,", cursor.rowcount, "row(s)")
        db.commit()
        db.close()
    except Exception:
        print("No data left for these IDs")
```

```python
# Reviews: create, update, delete
import pymysql


def _connect():
    # Set these parameters to match your own database
    return pymysql.connect(user='root', passwd='00000000', database='item', charset='utf8')


# review_usedd, review_usedpage, then review_tit1, review_titurl1, ..., review_tit10, review_titurl10
REVIEW_COLUMNS = ["review_usedd", "review_usedpage"] + [
    name for i in range(1, 11) for name in ("review_tit%d" % i, "review_titurl%d" % i)
]
REVIEW_INSERT_SQL = (
    "INSERT INTO `review` (`review_page`, "
    + ", ".join("`" + c + "`" for c in REVIEW_COLUMNS)
    + ") VALUES (" + ", ".join(["%s"] * (len(REVIEW_COLUMNS) + 1)) + ")"
)
REVIEW_UPDATE_SQL = (
    "UPDATE review SET " + ", ".join(c + " = %s" for c in REVIEW_COLUMNS)
    + " WHERE review_page = %s"
)


def _review_values():
    # Same order as REVIEW_COLUMNS
    return (usedd, usedpage,
            titlink0, titq0, titlink1, titq1, titlink2, titq2, titlink3, titq3,
            titlink4, titq4, titlink5, titq5, titlink6, titq6, titlink7, titq7,
            titlink8, titq8, titlink9, titq9)


# Create the row, or update it if the product ID already exists
def reviewmysql():
    db = _connect()
    cursor = db.cursor()
    try:
        cursor.execute("SELECT * FROM `review` WHERE review_page = %s", (page,))
        if len(cursor.fetchall()) == 0:
            cursor.execute(REVIEW_INSERT_SQL, (page,) + _review_values())
            print("Reviews saved as a new row")
        else:
            cursor.execute(REVIEW_UPDATE_SQL, _review_values() + (page,))
            print("Reviews updated,", cursor.rowcount, "row(s)")
        db.commit()
    except Exception as e:
        print("This record could not be saved:", e)
    finally:
        db.close()


# Update only
def reviewupdate():
    try:
        db = _connect()
        cursor = db.cursor()
        cursor.execute(REVIEW_UPDATE_SQL, _review_values() + (page,))
        print("Reviews updated,", cursor.rowcount, "row(s)")
        db.commit()
        db.close()
    except Exception:
        print("Some fields are missing, please crawl the product again")


# Delete every row whose ID lies between numst and numed
def reviewdelect():
    try:
        db = _connect()
        cursor = db.cursor()
        cursor.execute("DELETE FROM review WHERE review_page >= %s AND review_page <= %s", (numst, numed))
        print("Reviews deleted,", cursor.rowcount, "row(s)")
        db.commit()
        db.close()
    except Exception:
        print("No data left for these IDs")
```
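Product names and review titles often contain quotation marks, which is the usual reason a hand-formatted SQL string fails with a "special characters" error. The sketch below shows the difference between formatting values into the SQL text and passing them as parameters. It is an illustration under assumptions: it uses the built-in `sqlite3` module (placeholder `?`) so it can run without a server, while `pymysql` uses `%s` placeholders with the values given as the second argument of `cursor.execute`. The table and function names are hypothetical.

```python
import sqlite3


def save_title_formatted(conn, title):
    """Build the SQL text by string formatting (breaks on quotes in the title)."""
    conn.execute('INSERT INTO review (review_tit1) VALUES ("%s")' % title)


def save_title_bound(conn, title):
    """Pass the title as a parameter; the driver handles quoting."""
    conn.execute("INSERT INTO review (review_tit1) VALUES (?)", (title,))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE review (review_tit1 TEXT)")

title = 'say "hi"'
try:
    save_title_formatted(conn, title)
except sqlite3.Error as e:
    print("formatted query failed:", e)

save_title_bound(conn, title)
print(conn.execute("SELECT review_tit1 FROM review").fetchall())
```

Binding parameters also keeps a crawled string from being interpreted as SQL, which matters here because every stored value comes from a third-party web page.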
